Why Correlation Isn’t Causation in Brain Data

Why do correlations between brain signals not suggest causal relations?

As humans, we naturally think of the world in terms of cause and effect: a laundry ticket scores us our laundry at the shop around the corner. And as neuroscientists, we are naturally drawn to the idea of mapping cause and effect. Early in my career, I was thrilled by the prospect of using brain signals to decode how neurons interact (https://pmc.ncbi.nlm.nih.gov/articles/PMC2706692/). The dream was seductive: uncover the mechanistic wiring of the brain, where one neuron’s firing could be seen as directly influencing another, neatly captured by our data. In a field that craves causal explanations, an algorithm capable of translating noisy signals into definitive causal maps would be nothing short of revolutionary.

But here’s where the reality — and the statistics — step in.

The Allure of Causation

At its core, neuroscience is a quest to explain behavior in terms of underlying mechanisms. We long to tell stories of neurons “talking” to one another, of brain regions orchestrating complex tasks. This narrative isn’t just appealing; it’s deeply woven into our scientific culture. However, as I delved deeper into the statistical methods underpinning data analysis, I came to understand that the observed correlations in brain signals are far from a straightforward window into causation.

Imagine two neurons that often fire in close succession. The immediate reaction might be to assume one is triggering the other. Yet, the brain is an enormously interconnected network. The signal we measure from any one neuron is influenced not only by its direct inputs but also by the myriad of hidden variables — other neurons, glial cells, or even systemic fluctuations — that we simply can’t record. These unobserved factors are what statisticians call confounders.

The Hidden World of Confounders

Let’s consider a simplified linear model:

Y=Xβ+Zγ+ε

Here, X represents the observed neural source signals, Y the neural targets we’re interested in (perhaps the activity of a downstream neuron or a behavioral measure; often we ask how neurons influence one another, in which case Y=X), and Z all the unrecorded confounders, with γ their effect on Y and ε the remaining noise. The coefficient β would be the true causal effect of X on Y if we could somehow isolate it from the rest of the brain. But a key challenge arises because we don’t observe Z.

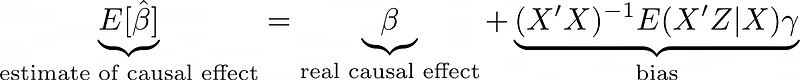

When we perform a regression that omits Z, our estimate of β is biased. In statistical terms, this is known as omitted variable bias (OVB, https://en.wikipedia.org/wiki/Omitted-variable_bias). For ordinary least squares, the bias is roughly given by:

E[β̂] − β ≈ (XᵀX)⁻¹XᵀZγ

This equation tells us that if the influence of the unobserved confounders (captured by Zγ) is comparable in magnitude to the influence of our observed signals, or worse, vastly exceeds it, then our estimate of β will be fundamentally flawed. And in the context of brain recordings, this is exactly the problem we face. We might record on the order of 10³ neurons or signals, but the brain contains on the order of 86 billion interacting elements. It is like wanting to understand all people in the world by just talking to your Twitter friends. The sheer number of unobserved variables dwarfs the signals we measure, making it unlikely that the apparent correlations truly reflect direct causal interactions.
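To make the bias concrete, here is a minimal simulation sketch in NumPy. Everything in it is an illustrative assumption rather than anything measured: the sample size, the 3 recorded signals, the 50 hidden confounders, and the particular values of β and γ. It fits a regression without Z and compares the resulting error with the standard least-squares omitted-variable expression (XᵀX)⁻¹XᵀZγ.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p, q = 5000, 3, 50  # samples, recorded signals, hidden confounders (illustrative)

Z = rng.normal(size=(n, q))                # unrecorded confounders
A = rng.normal(size=(q, p)) / np.sqrt(q)   # how confounders drive the recorded signals
X = Z @ A + rng.normal(size=(n, p))        # recorded signals, correlated with Z
beta = np.array([1.0, 0.0, -0.5])          # assumed true causal effects of X on Y
gamma = rng.normal(size=q)                 # assumed effects of the confounders on Y
Y = X @ beta + Z @ gamma + 0.1 * rng.normal(size=n)

# Regression that omits Z: the only one we can run on real recordings
beta_short, *_ = np.linalg.lstsq(X, Y, rcond=None)

# Bias predicted by the omitted-variable expression (X^T X)^{-1} X^T Z gamma
predicted_bias = np.linalg.solve(X.T @ X, X.T @ (Z @ gamma))

# Regression that includes Z: only possible because this is a simulation
beta_full, *_ = np.linalg.lstsq(np.hstack([X, Z]), Y, rcond=None)

print("true beta:         ", beta)
print("estimate without Z:", beta_short)
print("observed bias:     ", beta_short - beta)
print("predicted bias:    ", predicted_bias)
print("estimate with Z:   ", beta_full[:p])
```

In this toy setting the observed and predicted bias agree closely, and β is only recovered once Z enters the regression, which is exactly what we cannot do with real recordings.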

Now, practitioners in the field may argue that they are often not using raw correlations. They use advanced algorithms like Directed Information (https://link.springer.com/book/10.1007/978-3-642-54474-3) or Granger causality (https://www.scholarpedia.org/article/Granger_causality). However, if you write out the versions of the OVB equation for these methods (or any other methods that I am aware of), things do not look better. We can also look at other complex algorithms like the “deconfounder” (https://arxiv.org/abs/1805.06826), which instead makes strong assumptions about the data that are far from reality (e.g. conditional independences). Algorithms fundamentally cannot undo the problems that come from omitted variable bias.
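As a rough illustration of why lag-based methods inherit the same problem, the sketch below uses a Granger-style lagged regression (not a full Granger test, and not any particular toolbox's implementation). The setup is an assumption made for the example: a hidden driver z reaches x one step before it reaches y, and x never influences y.

```python
import numpy as np

rng = np.random.default_rng(1)
T = 20000

z = rng.normal(size=T)                         # hidden common driver (never recorded)
x = np.zeros(T)
y = np.zeros(T)
x[1:] = z[:-1] + 0.5 * rng.normal(size=T - 1)  # x follows z with lag 1
y[2:] = z[:-2] + 0.5 * rng.normal(size=T - 2)  # y follows z with lag 2; x never enters y


def ols(columns, target):
    """Return least-squares coefficients and residual variance."""
    design = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    resid = target - design @ coef
    return coef, resid.var()


target = y[3:]     # y_t
y_lag = y[2:-1]    # y_{t-1}
x_lag = x[2:-1]    # x_{t-1}
z_lag2 = z[1:-2]   # z_{t-2}, the actual driver of y_t

_, var_restricted = ols([y_lag], target)          # past of y only
coef_gc, var_full = ols([y_lag, x_lag], target)   # add the past of x
coef_z, _ = ols([y_lag, x_lag, z_lag2], target)   # also add the hidden driver

print("residual variance removed by x's past:", 1 - var_full / var_restricted)
print("coefficient on x_{t-1}, z omitted:    ", coef_gc[1])
print("coefficient on x_{t-1}, z included:   ", coef_z[1])
```

The past of x sharply improves the prediction of y, so a lag-based analysis would report x as driving y, yet the coefficient collapses toward zero once the hidden driver is included.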

Implications Across Fields

This isn’t a problem unique to neuroscience. Whether we’re examining economic indicators, ecosystem dynamics, or medical data, the leap from correlation to causation is perilous. Bryan Johnson, known for his ambitious efforts in human rejuvenation, aims to make his biomarkers indistinguishable from those of a young person (https://en.wikipedia.org/wiki/Bryan_Johnson). Correlationally, he is perfect. Causally, it is very unclear if he will live longer. Just because two variables move in tandem doesn’t mean one drives the other. It does not even necessarily make it meaningfully more likely that one drives the other.

The allure of a “holy grail” algorithm that transforms correlation into causation is powerful but, in practice, remains unattainable.

Embracing the Limitations

In a world full of confounders, is it possible for us to know how things work? Obviously, it is. We walk around, pick up our laundry, and seem to be doing just fine, despite the fact that, in a way, inferring causality from observation alone is impossible in heavily confounded systems. How can we? Well, we built a world that is causally relatively simple. We learn how the world works, causally, from one another. We randomly make mistakes (like forgetting a laundry ticket), which give us insights into the causal nature of the world. When we can interfere with the world, learning about causality is not so hard after all. And within neuroscience, the causal insights we have come, by and large, from perturbation studies. Let us celebrate the many deep insights we have obtained from the carefully controlled perturbation studies that have been part of neuroscience since the beginning.
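Why perturbation helps can be sketched with the same kind of toy model. The numbers below (β = 0.5, γ = 2.0, a single hidden confounder z) are assumptions chosen for illustration. In the observational regime x is partly driven by z; in the perturbation regime the experimenter sets x at random, so it is independent of z by construction.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 5000
beta, gamma = 0.5, 2.0  # assumed true effects of x and of the confounder on y

z = rng.normal(size=n)  # hidden confounder, never recorded

# Observational regime: x is partly driven by the confounder
x_obs = z + rng.normal(size=n)
y_obs = beta * x_obs + gamma * z + 0.1 * rng.normal(size=n)

# Perturbation regime: x is set at random, independent of z
x_do = rng.normal(size=n)
y_do = beta * x_do + gamma * z + 0.1 * rng.normal(size=n)


def slope(x, y):
    """Least-squares slope of y on x (no intercept; all signals are zero-mean)."""
    return (x @ y) / (x @ x)


slope_obs = slope(x_obs, y_obs)
slope_do = slope(x_do, y_do)

print("true beta:           ", beta)
print("observational slope: ", slope_obs)
print("perturbation slope:  ", slope_do)
```

The confounder is still there in the perturbation regime and still adds variance to y, but because it no longer covaries with x, the regression slope lands near the true β.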

“But Konrad, aren’t there wonderful correlational studies?”

There are plenty of truly exciting correlational studies. Some describe statistics of data (e.g. 1/f spectra). Some write about coding (which features are there?). Others do engineering (e.g. brain decoding). It should also be mentioned that sometimes causation in brains may be correlationally measurable, e.g. in molecularly annotated connectomics where the causal elements of a system may directly be observable. The problem is not that correlational studies are uninteresting; it arises when we start selling them as causal.

Let us stop pretending correlation is causation

Correlational studies are interesting. Let us simply stop pretending they get at causality. Functional connectivity, as it’s often measured, is essentially just a correlation matrix — which is fine as long as we don’t mistake it for a direct measure of causation. It is neither functional nor a sign of connectivity. Let us all strive for a more precise use of causal terms.